- Java Virtual Threads represent a significant advancement in Java concurrent programming. However, they don’t offer a clear advantage over Open Liberty’s existing autonomic thread pool for typical cloud-native Java workloads.

- For CPU-intensive workloads, virtual threads showed lower throughput than Open Liberty’s thread pool, for reasons that are not yet fully understood.

- Virtual threads ramp up from idle to maximum throughput faster than Open Liberty’s thread pool due to their thread-per-request model.

- The memory footprint in Open Liberty deployments can vary greatly based on application design, workload level, and garbage collection behavior. Thus, the reduced footprint of virtual threads might not lead to an overall reduction in memory usage.

- Virtual threads exhibited unexpected performance issues in certain use cases. We are working with the OpenJDK Community to investigate these issues further.

Introduction to Java Virtual Threads

The release of JDK 21 finalized Java Virtual Threads, a feature that promises to revolutionize how Java developers handle concurrency in their applications. The key goals of Java Virtual Threads include providing a lightweight, scalable, and user-friendly concurrency model, using system resources efficiently, and reducing the effort involved in writing, maintaining, and observing high-throughput concurrent applications (JEP 425).

Java Virtual Threads have generated significant interest among Java developers, including those using application frameworks like Open Liberty, a modular, open-source, cloud-native Java application runtime. As members of the Liberty performance engineering team, we evaluated whether this new Java capability could benefit our users or even replace the current thread pool logic used in the Liberty application runtime. At the very least, we wanted to understand virtual thread technology and its performance to offer informed guidance to Liberty users.

This article shares our findings, including:

- An overview of the Java Virtual Threads implementation.

- An overview of current Liberty thread pool technology.

- An evaluation across several performance metrics, including unexpected observations.

- A summary of our findings.

Java Virtual Threads

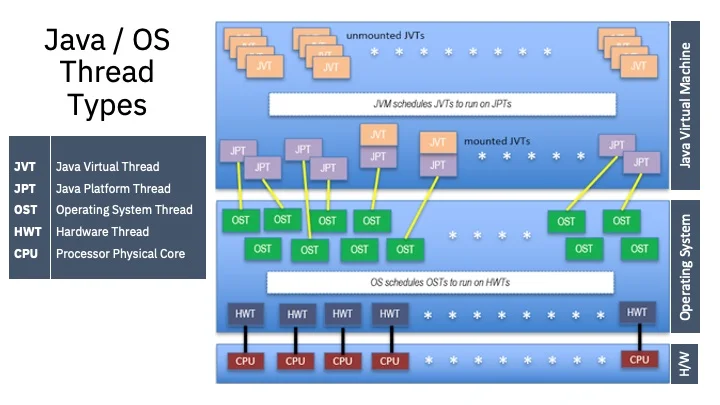

Java Virtual Threads were initially introduced in JDK 19, refined in JDK 20, and finalized in JDK 21 (as described in JDK Enhancement Proposal (JEP) 444). Traditionally, Java applications implemented a “thread-per-request” model, where each request is handled by a dedicated thread throughout its lifecycle. These threads (platform threads) are implemented as wrappers around operating system (OS) threads. However, OS threads consume substantial system memory and are scheduled by the OS layer, leading to scaling issues when deploying large numbers of threads.

Virtual threads aim to preserve the simplicity of the thread-per-request model while avoiding the high cost of dedicated OS threads. They minimize overhead by initially creating each thread as a lightweight object on the Java heap, using OS threads only when necessary. This “sharing” of OS threads allows better utilization of system resources. Theoretically, virtual threads enable developers to use “millions of threads” in a single JVM.
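The difference between the two kinds of thread can be seen with the standard JDK 21 APIs. The sketch below assumes JDK 21 or later. It starts one platform thread and one virtual thread, then uses `Executors.newVirtualThreadPerTaskExecutor()` to give each of 10,000 short blocking tasks its own virtual thread. The class and method names are illustrative only and are not part of Liberty.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class VirtualThreadBasics {

    // Starts one platform thread and one virtual thread and records whether each one is virtual.
    static boolean[] startBoth() throws InterruptedException {
        boolean[] isVirtual = new boolean[2];
        // Platform thread: a thin wrapper around a dedicated OS thread.
        Thread platform = Thread.ofPlatform().start(() -> isVirtual[0] = Thread.currentThread().isVirtual());
        // Virtual thread: a heap object that is mounted on a carrier (platform) thread only while it runs.
        Thread virtual = Thread.ofVirtual().start(() -> isVirtual[1] = Thread.currentThread().isVirtual());
        platform.join();
        virtual.join();
        return isVirtual;
    }

    // Thread-per-request: every submitted task gets its own new virtual thread.
    // Returns the sum of the task ids so the caller can check that every task ran.
    static int runTasks(int taskCount) throws Exception {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<Integer>> results = new ArrayList<>();
            for (int i = 0; i < taskCount; i++) {
                final int id = i;
                results.add(executor.submit(() -> {
                    Thread.sleep(Duration.ofMillis(10)); // blocking call: the virtual thread unmounts here
                    return id;
                }));
            }
            int sum = 0;
            for (Future<Integer> f : results) {
                sum += f.get();
            }
            return sum;
        } // close() waits for all tasks to finish
    }

    public static void main(String[] args) throws Exception {
        boolean[] v = startBoth();
        System.out.println("platform isVirtual=" + v[0] + ", virtual isVirtual=" + v[1]);
        System.out.println("sum of task ids = " + runTasks(10_000));
    }
}
```

Creating 10,000 platform threads for the same job would reserve 10,000 OS threads; here the tasks share a small set of carrier threads while they sleep.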

Open Liberty’s Autonomic Thread Pool

Open Liberty’s shared thread pool approach also reduces the cost of dedicated OS threads. Liberty uses shared threads (the “Liberty thread pool”) for application logic functions and separate threads for I/O functions. The Liberty thread pool is adaptive and sized autonomically. For most use cases, additional tuning is unnecessary, though minimum and maximum pool sizes can be configured.
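The shared-pool idea itself (many tasks, few reused platform threads) can be illustrated with a plain JDK executor. This is a minimal sketch, not Liberty's implementation: it uses a fixed-size `Executors.newFixedThreadPool` and does not model autonomic sizing. In Liberty itself, the minimum and maximum pool sizes are set with the `coreThreads` and `maxThreads` attributes of the `executor` element in `server.xml`. The class and method names below are hypothetical.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class SharedPoolSketch {

    // Runs taskCount short tasks on a fixed pool of poolSize platform threads and
    // returns how many distinct threads actually executed them.
    static int distinctWorkers(int poolSize, int taskCount) {
        Set<Long> workers = ConcurrentHashMap.newKeySet();
        try (ExecutorService pool = Executors.newFixedThreadPool(poolSize)) {
            for (int i = 0; i < taskCount; i++) {
                // Tasks beyond poolSize wait in the pool's queue until a thread frees up.
                pool.execute(() -> workers.add(Thread.currentThread().threadId()));
            }
        } // close() waits for all queued tasks to complete
        return workers.size();
    }

    public static void main(String[] args) {
        System.out.println("1,000 tasks ran on " + distinctWorkers(4, 1_000) + " pooled threads");
    }
}
```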

Unlike a web server, Liberty is not just establishing idle I/O connections for long periods. Applications running on Liberty generally require CPU resources for business logic. A Liberty deployment typically uses a few hundred threads or fewer, especially in containers or pods with limited CPU allocations.

Performance Tests

We evaluated use cases and configurations commonly used by Liberty customers, using existing benchmark applications to compare Liberty’s thread pool and virtual threads’ performance. These benchmark applications utilize REST and MicroProfile, performing basic business logic during transactions.

We focused on configurations with tens to hundreds of threads to model what Liberty users would experience if we replaced the autonomic thread pool with virtual threads. Additionally, we compared Liberty’s thread pool and virtual threads with a few thousand threads, given virtual threads’ advertised strength in handling numerous threads.

Test Case Environment

We conducted performance tests using Eclipse Temurin (OpenJDK with HotSpot JVM) and IBM Semeru Runtimes (OpenJDK with OpenJ9 JVM). The performance differentials between Liberty’s thread pool and virtual threads were similar with both JDKs. Unless otherwise noted, results shown were produced using Liberty 23.0.0.10 GA with Temurin 21.0.1_12.

Disclaimer: Our evaluation focused on whether replacing Liberty’s autonomic thread pool with virtual threads using the “thread-per-request” model would benefit Liberty users. Results may differ for other application runtimes that lack a self-tuning thread pool like Liberty.

Test Case 1: CPU Throughput

Objective: Evaluate CPU throughput to determine if there is a performance loss when using virtual threads versus Liberty’s thread pool.

Findings: In some configurations, workloads experienced 10-40% lower throughput with virtual threads compared to Liberty’s thread pool.

We ran several CPU-intensive applications and compared how many transactions per second (tps) could be completed on a given number of CPUs running with virtual threads versus Liberty’s thread pool. Apache JMeter was used to drive loads to push the system to higher CPU utilization levels.

In one example, we ran an online banking app with a 2 ms delay to test virtual threads’ mount/unmount/remount functionality while maintaining CPU intensity. The load was gradually increased, with throughput measured over a stable 150-second period at each level.
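The shape of such a transaction (CPU work followed by a short blocking delay) can be sketched as below. This is a toy, assuming a hypothetical `transaction` method that burns CPU with a simple arithmetic loop and then sleeps 2 ms; it is not the banking application, has no warm-up, and is not driven by JMeter, so a single run says nothing about the results reported here. On a virtual thread, the `sleep` unmounts the thread from its carrier and the wake-up remounts it, which is the path the delay was meant to exercise. The pool size of four threads per CPU is an arbitrary assumption.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicLong;

public class DelayedTransactionSketch {

    // Hypothetical stand-in for one transaction: some CPU work, then a 2 ms blocking delay.
    static long transaction(long seed) throws InterruptedException {
        long x = seed;
        for (int i = 0; i < 20_000; i++) {
            x = x * 6364136223846793005L + 1442695040888963407L; // cheap arithmetic step to burn CPU
        }
        Thread.sleep(Duration.ofMillis(2)); // virtual threads unmount here and remount on wake-up
        return x;
    }

    // Runs `count` transactions on the given executor and returns transactions per second.
    static double measureTps(ExecutorService executor, int count) {
        AtomicLong sink = new AtomicLong(); // consumes results so the CPU work is not optimized away
        long start = System.nanoTime();
        try (executor) {
            for (int i = 0; i < count; i++) {
                final long seed = i;
                executor.submit(() -> {
                    sink.addAndGet(transaction(seed));
                    return null;
                });
            }
        } // close() waits for all submitted transactions to finish
        double seconds = (System.nanoTime() - start) / 1e9;
        return count / seconds;
    }

    public static void main(String[] args) {
        int poolSize = Runtime.getRuntime().availableProcessors() * 4; // arbitrary assumption
        System.out.printf("fixed pool : %.0f tps%n", measureTps(Executors.newFixedThreadPool(poolSize), 5_000));
        System.out.printf("virtual    : %.0f tps%n", measureTps(Executors.newVirtualThreadPerTaskExecutor(), 5_000));
    }
}
```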

At low load levels, virtual thread throughput matched Liberty’s thread pool throughput, with virtual threads consuming more CPU. As the load increased, transactions per second using virtual threads gradually fell behind Liberty’s thread pool.

Virtual threads may perform slower in CPU-intensive applications because they do not inherently accelerate code execution. Overheads include:

- Mounting and Unmounting: A virtual thread is mounted on a platform (carrier) thread to run and is unmounted at blocking points and on completion. A JVM Tool Interface (JVMTI) notification is emitted for each mount and unmount, each adding a small cost.

- Garbage Collection: Virtual thread objects are created and discarded for each transaction, incurring allocation and garbage collection costs.

- Loss of Thread-Linked Context: Liberty uses ThreadLocal variables to keep information that later requests running on the same thread can reuse. With virtual threads this efficiency is lost, because ThreadLocal values are discarded when each short-lived virtual thread ends. Major ThreadLocal uses were converted to mechanisms that are not tied to a thread, but some smaller-impact instances remain.
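The ThreadLocal effect can be reproduced in a few lines. This sketch assumes a hypothetical per-thread cache (a reusable `StringBuilder`) and counts how often it has to be created. On a pool of four platform threads, at most four caches are built and then reused; with one virtual thread per task, every task starts with an empty ThreadLocal and builds its own.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class ThreadLocalReuseSketch {

    // Counts how often the per-thread object is created.
    static final AtomicInteger CREATIONS = new AtomicInteger();

    // Hypothetical per-thread cache, standing in for a reusable buffer or formatter.
    static final ThreadLocal<StringBuilder> BUFFER = ThreadLocal.withInitial(() -> {
        CREATIONS.incrementAndGet();
        return new StringBuilder(8192);
    });

    // Runs `tasks` tasks that each use the cached buffer; returns how many buffers were created.
    static int creationsFor(ExecutorService executor, int tasks) {
        CREATIONS.set(0);
        try (executor) {
            for (int i = 0; i < tasks; i++) {
                executor.execute(() -> {
                    StringBuilder sb = BUFFER.get(); // created on first use in each thread
                    sb.setLength(0);
                    sb.append("request");
                });
            }
        } // close() waits for all tasks to finish
        return CREATIONS.get();
    }

    public static void main(String[] args) {
        // Pooled platform threads: at most one buffer per pool thread, reused across tasks.
        System.out.println("pool of 4     : " + creationsFor(Executors.newFixedThreadPool(4), 1_000));
        // One virtual thread per task: a fresh buffer for every task.
        System.out.println("virtual/task  : " + creationsFor(Executors.newVirtualThreadPerTaskExecutor(), 1_000));
    }
}
```

Each extra creation is also an extra allocation, which ties this overhead to the garbage collection cost listed above.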

CPU profiling revealed that none of these virtual thread overheads were significant enough to explain the throughput discrepancy. Other possible causes are discussed in the “Unexpected Virtual Threads Performance Findings” section.

Virtual threads did not improve Java code execution speed compared to regular Java platform threads in the Liberty thread pool for a CPU-intensive application on a small number of CPUs.

Test Case 2: Ramp-Up Time

Objective: Quantify how quickly virtual threads reach full throughput compared to Liberty’s thread pool.

Findings: Under heavy load, applications using virtual threads reached maximum throughput faster than those using Liberty’s thread pool.

The virtual thread model allows each task to have its own thread, so our Liberty virtual threads prototype launched a new virtual thread for each received task. Thus, with virtual threads, every task immediately has a thread, while with Liberty’s thread pool, tasks might wait for thread availability.
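The queuing difference can be made visible by measuring how many tasks run at the same moment. In this sketch, each task blocks for 50 ms as a hypothetical stand-in for a long-latency call. A fixed pool of four threads never runs more than four tasks at once, while the virtual-thread-per-task executor starts all of them almost immediately. The 50 ms delay and the task counts are arbitrary assumptions.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class QueueingSketch {

    // Submits `tasks` blocking tasks and returns the peak number running at the same time.
    static int peakConcurrency(ExecutorService executor, int tasks) {
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        try (executor) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    int now = running.incrementAndGet();
                    peak.accumulateAndGet(now, Math::max);
                    try {
                        Thread.sleep(Duration.ofMillis(50)); // stands in for a long-latency backend call
                    } finally {
                        running.decrementAndGet();
                    }
                    return null;
                });
            }
        } // close() waits for all tasks to finish
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("fixed pool of 4 : peak " + peakConcurrency(Executors.newFixedThreadPool(4), 100));
        System.out.println("virtual/task    : peak " + peakConcurrency(Executors.newVirtualThreadPerTaskExecutor(), 100));
    }
}
```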

To test this scenario, we used an online banking application with a lengthy response latency to saturate the CPU with thousands of simultaneous transactions, requiring thousands of threads.

Handling Thousands of Threads in Liberty’s Thread Pool

Liberty’s thread pool handled thousands of threads well. We observed slightly higher throughput (2-3%) with Liberty’s thread pool than with virtual threads, while using about 10% less CPU, which yielded 12-15% more transactions per unit of CPU utilization. Liberty’s thread pool autonomics allow the pool to grow to thousands of threads while maintaining stability.
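As a rough consistency check of these figures, write $T$ for throughput and $C$ for CPU utilization, with subscripts for Liberty's thread pool and virtual threads. Taking the rounded values above (2-3% higher throughput, about 10% less CPU), the efficiency ratio works out as:

```latex
\frac{T_{\text{pool}} / C_{\text{pool}}}{T_{\text{vt}} / C_{\text{vt}}}
  = \frac{T_{\text{pool}} / T_{\text{vt}}}{C_{\text{pool}} / C_{\text{vt}}}
  \approx \frac{1.02}{0.90} \approx 1.13
  \quad\text{to}\quad
  \frac{1.03}{0.90} \approx 1.14
```

That is roughly 13-14% more transactions per unit of CPU, within the 12-15% range observed; the exact value depends on the unrounded measurements.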

Ramp-Up Time Using Liberty’s Thread Pool vs. Virtual Threads

In scaling evaluations, virtual threads ramped up quickly from low load to full capacity. Liberty’s thread pool ramp-up was slower, adjusting gradually based on throughput observations, taking tens of minutes to add threads as needed.

We modified Liberty’s thread pool autonomics to grow more aggressively when idle CPU resources are available and the request queue is deep. This fix, available in Open Liberty 23.0.0.10 and later, reduced ramp-up time from tens of minutes to 20-30 seconds when a heavy load was applied to the online banking app running on Liberty’s thread pool, even for workloads requiring about 6,000 threads. The virtual threads prototype still ramped up more quickly, but the gap was smaller.
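Why a growth rule conditioned on idle CPU and a deep queue shortens ramp-up can be shown with a toy model. The rule below is hypothetical and is NOT Liberty's algorithm: the 80% idle-CPU threshold, the "queue longer than the pool" test, and the doubling step are all assumptions chosen for illustration. Under a sudden load needing about 6,000 threads, doubling from 10 threads reaches the target in 10 adjustment intervals, whereas adding one thread per interval would take 5,990.

```java
public class PoolGrowthSketch {

    // Hypothetical growth rule (not Liberty's): grow in large steps when the CPU is idle and
    // work is queuing; otherwise make small, cautious adjustments.
    static int nextPoolSize(int current, int max, double cpuUtilization, int queueDepth) {
        boolean cpuIdle = cpuUtilization < 0.80;   // assumed idle-CPU threshold
        boolean queueDeep = queueDepth > current;  // assumed "deep queue" threshold
        int step;
        if (cpuIdle && queueDeep) {
            step = Math.max(1, current);           // aggressive: roughly double the pool
        } else if (queueDepth > 0) {
            step = 1;                              // cautious: one thread at a time
        } else {
            step = 0;                              // no backlog: hold steady
        }
        return Math.min(max, current + step);
    }

    public static void main(String[] args) {
        int size = 10;
        int intervals = 0;
        // Simulate a sudden heavy load that needs about 6,000 threads while CPU stays mostly idle.
        while (size < 6_000) {
            size = nextPoolSize(size, 6_000, 0.30, 100_000);
            intervals++;
        }
        // prints "reached 6000 threads after 10 adjustment intervals"
        System.out.println("reached " + size + " threads after " + intervals + " adjustment intervals");
    }
}
```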

Test Case 3: Memory Footprint

Objective: Determine how much memory the Java process uses under constant load for both virtual threads and Liberty’s thread pool.

Findings: The smaller per-thread footprint of virtual threads had a relatively small effect when only a few hundred threads were required and could be outweighed by other JVM memory usages.

Virtual threads use less memory than traditional platform threads due to their lack of dedicated backing OS threads. This test measured how virtual threads’ per-thread memory advantage affected total JVM memory usage at typical Liberty workload levels, resulting in mixed outcomes.

We expected virtual threads to consistently use less memory. However, results varied: sometimes virtual threads used less memory, sometimes more.

This variability was due to factors beyond the thread implementation, notably the DirectByteBuffers (DBBs) used in Java networking infrastructure. A DBB consists of a small Java reference object on the heap and a larger native memory area. The reference objects become garbage after use, but the associated native memory is not released until those objects are actually collected, which might not happen until a global GC. Until then, the memory footprint can grow.
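The native side of DirectByteBuffers can be observed through the standard `BufferPoolMXBean`, which reports a pool named `direct`. This sketch allocates a 16 MiB direct buffer and reads the pool's total capacity before and after. It assumes the JVM exposes the `direct` pool (both HotSpot and OpenJ9 are expected to, but the method returns -1 if it is absent). `System.gc()` is only a hint, so whether the capacity drops after the reference is cleared depends on the collector.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;

public class DirectBufferSketch {

    // Returns the total capacity, in bytes, of all direct buffers the JVM currently tracks.
    static long directCapacity() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if (pool.getName().equals("direct")) {
                return pool.getTotalCapacity();
            }
        }
        return -1; // pool not reported by this JVM
    }

    public static void main(String[] args) {
        long before = directCapacity();
        // The ByteBuffer object is a small on-heap handle; the 16 MiB lives in native memory.
        ByteBuffer buffer = ByteBuffer.allocateDirect(16 * 1024 * 1024);
        System.out.println("direct capacity grew by " + (directCapacity() - before) + " bytes");
        // Dropping the reference does not free the native memory immediately: it is released
        // only after a GC collects the handle. System.gc() is a hint and may not run a collection.
        buffer = null;
        System.gc();
        System.out.println("direct capacity after GC hint: " + directCapacity() + " bytes");
    }
}
```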

Note: Tests used a small minimum and large maximum heap size to highlight heap memory usage variability as a factor affecting JVM total memory usage.

In some cases where virtual threads used more memory, the difference was due to DirectByteBuffers retention. This does not indicate a virtual threads problem: Direct